Book-tracking

Mobile App

2023

A new chapter in book-tracking

How might we improve the user experience of book-tracking mobile apps?

Leisure readers need updated options for book-tracking systems that more effectively represent their wants and needs, and enhance their reading experience.

ROLE

UX Designer, in collaboration with one other designer

SCOPE

5 months

TOOLS

Figma

Google Forms

Microsoft Teams

Goodnotes

Microsoft Office

Usability heuristics

UX Honeycomb

PROCESS

Design Thinking

Desktop research

Competitive analysis

Surveys

Semi-structured interviews

Card sorting

User journey mapping

Affinity mapping

User archetypes

Independent & group ideation

Wireframing/prototyping

Thinking aloud testing

A/B testing

Usability heuristic assessment

Context

Book-tracking is — at its core — a cataloguing system for the books one has read and wants to read. It also promotes reflecting on and reviewing books by documenting one’s evaluation and thoughts.

With the progression of the tech revolution, book-tracking has become accessible in the palm of one’s hand, essentially creating a digital library that can fit neatly within the reader’s pocket. This transformation empowers modern readers with a convenient way to organize and share their interests, monitor their reading habits, and discover new books.

The problem is…

book-tracking users predominantly rely on a single app, Goodreads. The platform has seen minimal updates and innovation since its acquisition by Amazon in 2013, leaving users with a suboptimal and outdated user experience. Competing book-tracking apps struggle to match Goodreads' success in attracting an extensive user base.

This leaves an opportunity…

to explore why Goodreads maintains so much success despite its shortcomings and to design a book-tracking user experience that is more intentional and intuitive than current solutions.

Approach

In this project, my partner and I followed a design thinking framework. While it may appear linear, we were constantly jumping forward and backward between phases, and often working within more than one phase simultaneously.

Researching existing solutions

We began by trying to better understand the current market of book-tracking mobile apps. To do so, we investigated some of the top competitors suggested by the Insightful Reading blog and the iOS App Store. What we found was a mixture of dedicated book-tracking apps, digital reading apps with book-tracking functionalities, and simple note-taking apps. We also considered it important to acknowledge that manual journaling, while not digital, is a common method of book-tracking and should be included in our research.

Goodreads (dedicated book-tracking app)

StoryGraph (dedicated book-tracking app)

Bookly (dedicated book-tracking app)

Kobo Books (digital reading app with some book-tracking functionalities)

Apple Books (digital reading app with some book-tracking functionalities)

Libby (digital reading app with some book-tracking functionalities)

Amazon Kindle (digital reading app with some book-tracking functionalities)

Notes (app that enables manual book-tracking)

To gain an initial understanding of how the mobile apps compare to one another, we examined each app's listing in the US region's App Store and noted:

the average rating,

the number of ratings,

the offerings/features as listed in the app’s description

We then summarized these initial findings of their advertised features in a competitive analysis table to provide a general overview of key differences.

User research

We created an online survey using Google Forms and distributed it through a number of social circles and various message boards associated with my university.

The survey began with demographic questions, then proceeded to ask about people's reading interests and behaviors. If participants indicated that they were currently using or had previously used book-tracking, follow-up questions specific to book-tracking were shown.

The goal of our user interviews was to gain a deeper understanding of the users than the online survey could provide. We recruited five readers through our university's message board, Facebook group, and WhatsApp group messages.

All interviews lasted around 45-60 minutes, took place in person at my university, and included snacks and drinks.

The interview guide resembled the questions we included in the survey. We also incorporated multiple card sorting activities with each interview to allow users to visually group certain aspects or features, depending on their own priorities.

The three card stacks covered common features of book-tracking, potential statistics about personal reading behavior, and influential factors that affect book selection.

We also decided to analyze the App Store reviews of Goodreads (the most popular dedicated book-tracking app according to various book-tracking articles, the App Store, and our user survey results).

We collected the reviews using a JavaScript script and the open-source library app-store-scraper. We then used Python to implement the following five methods of analysis: sentiment analysis, keyword extraction, key-phrase extraction, GPT-3, and word clouds.

Our goal with this analysis was to gather a large dataset of app-specific opinions, each of which includes both a score and written feedback, providing both quantitative and qualitative data.

We also recognize that people who write reviews are often those with strong opinions about the app, which should be taken into account when considering the range of feedback.
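The keyword step of an analysis like this can be sketched in a few lines of Python. This is a minimal illustration rather than the script used in the project: it assumes the reviews have already been collected as records with a `score` and a `text` field, and the sample reviews, the stop-word list, and the function name `top_keywords` are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical sample records; real reviews would come from the scraper output.
reviews = [
    {"score": 2, "text": "The app feels outdated and the search is slow."},
    {"score": 5, "text": "Love tracking my books, but the search could be better."},
    {"score": 1, "text": "Outdated design. Search rarely finds my books."},
]

# A tiny illustrative stop-word list (an assumption, not a standard list).
STOP_WORDS = {"the", "and", "is", "my", "but", "be", "could", "a", "an", "of", "to"}


def top_keywords(records, max_score=5, n=3):
    """Count the most frequent non-stop-words in reviews scored <= max_score."""
    counts = Counter()
    for record in records:
        if record["score"] <= max_score:
            # Lowercase the text and keep alphabetic tokens only.
            words = re.findall(r"[a-z']+", record["text"].lower())
            counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(n)


# Keywords in low-scoring reviews (1-2 stars) hint at recurring complaints.
print(top_keywords(reviews, max_score=2, n=2))
```

Filtering by score before counting is what ties the quantitative side (the rating) to the qualitative side (the wording), so frequent words in one- and two-star reviews can be read as candidate pain points.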

In order to best process the data and insights gathered, we first outlined a typical reader’s user journey, inspired by Prateek Agarwal, in an online Figma whiteboard. We then documented the key statements and findings on digital post-it notes and matched them to relevant phases of the user journey. Within each phase, we then used affinity mapping to further cluster the insights.

Some key findings from our user research

Most book-tracking users use Goodreads and don’t know about alternative methods.

Users spend the most time discovering new books, organizing books into collections, and rating/reviewing.

Users highly value books recommended by friends, but don't use the apps to exchange recommendations.

Many users want an updated UI for their book-tracking method.

Most Goodreads users want a “Did not finish” shelf.

Goodreads users want more control over the privacy of their shelves and reviews.

Users would like more flexibility in searching, sorting, and filtering the books and reviews on Goodreads.

Users find the general five-step rating scale insufficient for expressing their opinions properly.

Many Goodreads users find writing book reviews intimidating.

Quotes from the interview participants

“I guess it’s [reading is] mostly in like periods where if I start something it might be like everyday, usually in the evening, or maybe after dinner or something, or maybe on the bus.”

“It’s [reading is] more of a personal experience”

“I think for me it started as a challenge. How many books can you actually read?”

Developing the archetypes

To develop the archetypes, we first identified various dimensions along which readers' characteristics diverged significantly in two or more ways. From this assortment, we narrowed the number of dimensions down to six by assessing where the disparities between readers' characteristics appeared most often and had a considerable impact on the reader journey.

We then developed specific combinations of the reader characteristics within the six dimensions based on typical and often repeated patterns we observed in the readers we surveyed and interviewed, resulting in our five reader archetypes.

Read more about the 6 dimensions of reader characteristics below

We found that readers exist on a spectrum of how social they are with their reading. On one end, Social readers are more likely not only to discuss the books they've read, but also to be interested in seeing what their friends are currently reading, and to publicly rate or review books.

On the opposite end of the spectrum, Intimate readers view reading as a more private experience. They might still analyze or contemplate the books they read, but only on their own.

We found that most readers fall into one of the following three categories with regard to their reading frequency: Occasional, Frequent, and Binge readers.

Occasional readers spend two days per week or less reading.

Frequent readers are the most consistent, reading more or less every day.

Binge readers tend to go through periods of reading often and periods of reading very little, e.g., they might read daily for two weeks and then stop reading for two months.

The vast majority of readers in our research read print books, whether exclusively or alongside other formats. The largest difference we saw was whether they read digital formats (e-books, audiobooks) in combination with print books (Combination readers) or print books exclusively (Print-only readers).

On one end of the continuum, Active readers are more likely to annotate, take notes, do external related research, and engage with the book outside of just reading.

On the other end, Passive readers tend to stick simply to reading and internally digesting the content of a book without taking external action.

As implied in the expression “reading for pleasure”, we confirmed that all readers seek enjoyment, relaxation, or entertainment from reading. We identify these as Recreational readers.

However, we found that some readers exhibit additional layers of purpose behind their reading, whether it's to learn something new, to meet a target number of books, to develop themselves, etc. We refer to those readers as Goal-oriented.

Readers' book preferences range greatly, which makes categorizing readers by preferred individual genres infeasible. However, we managed to group them into three broad taste categories: Mainstream, Fixed, and Exploratory readers.

Mainstream readers tend to follow common trends, bestseller lists, or generally popular and well-known books.

Fixed readers seek and enjoy specific qualities in the books they read, rarely venturing into new territories of books; their choices can be based on, e.g., book genre, topic, or author.

Exploratory readers are somewhat the opposite of Fixed readers, as they enjoy exploring books completely new to them and outside their “reading comfort zone”.
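The six dimensions above can also be written down as a small data structure, which makes it easier to compare a survey respondent with a reference profile. The sketch below is only illustrative: the dimension labels (`sociality`, `frequency`, and so on), the class names, and the `shared_dimensions` helper are assumptions, and it simplifies the spectrums into discrete values.

```python
from dataclasses import dataclass, fields
from enum import Enum


class Sociality(Enum):
    SOCIAL = "social"
    INTIMATE = "intimate"


class Frequency(Enum):
    OCCASIONAL = "occasional"
    FREQUENT = "frequent"
    BINGE = "binge"


class BookFormat(Enum):
    COMBINATION = "combination"
    PRINT_ONLY = "print-only"


class Engagement(Enum):
    ACTIVE = "active"
    PASSIVE = "passive"


class Purpose(Enum):
    RECREATIONAL = "recreational"
    GOAL_ORIENTED = "goal-oriented"


class Taste(Enum):
    MAINSTREAM = "mainstream"
    FIXED = "fixed"
    EXPLORATORY = "exploratory"


@dataclass(frozen=True)
class ReaderProfile:
    """One reader described along the six dimensions (labels are assumed)."""
    sociality: Sociality
    frequency: Frequency
    book_format: BookFormat
    engagement: Engagement
    purpose: Purpose
    taste: Taste


def shared_dimensions(a: ReaderProfile, b: ReaderProfile) -> list[str]:
    """Return the names of the dimensions on which two profiles agree."""
    return [f.name for f in fields(a) if getattr(a, f.name) == getattr(b, f.name)]


# Hypothetical respondent and reference profile (not one of the five archetypes).
respondent = ReaderProfile(Sociality.SOCIAL, Frequency.BINGE, BookFormat.COMBINATION,
                           Engagement.PASSIVE, Purpose.GOAL_ORIENTED, Taste.MAINSTREAM)
reference = ReaderProfile(Sociality.SOCIAL, Frequency.FREQUENT, BookFormat.COMBINATION,
                          Engagement.ACTIVE, Purpose.GOAL_ORIENTED, Taste.MAINSTREAM)

print(shared_dimensions(respondent, reference))
```

Counting shared dimensions is one simple way to see which reference profile a respondent sits closest to; a real classification would likely need to weigh dimensions differently.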

During our user research — especially during the interviews — we began to note that participants were polarized on a number of behaviors, needs, and goals, such as wanting to discuss books with friends or taking part in reading challenges. To account for the differences we came across, we decided it would be valuable to establish various archetypes to further guide the design process.

These readers are generally proactive and serious about their reading practice. They do not usually feel confined to one set of genres and they are eager to learn from the books they read or gain new perspectives.

These readers are considered slightly more conventional. They appreciate the physicality of print books and the sensory experience that goes along with them. They are not often concerned with sharing their reading experience with other people and while they read frequently, they prefer to not add further pressure to their reading by attaching additional goals or expectations to it.

Readers who are Trend-seekers are generally tuned in to social media and are thus social readers by nature. They often rely on online recommendations and bestseller lists to choose their next book. They can be driven by various goals, such as being able to discuss popular books with friends or hitting certain reading targets by the end of the year.

These readers are those who read occasionally with the goal of bettering themselves in some way, whether professional, personal, or otherwise. They tend to see reading as more of an intimate experience and gravitate towards fixed categories, such as self-development books.

These readers are essentially new to the hobby but are making an effort to improve their habits over time. While some novice readers may like the social aspect of reading, many consider reading to be a more personal activity. They also generally tend to read popular or mainstream titles, as these can be considered more "trustworthy" to Novice readers.

Revisiting the existing solutions

During our research, we developed a better understanding of the features and characteristics of book-tracking systems that users find most valuable. We decided to use this updated insight to refer back to our study of the current solutions and further expand the competitive analysis table we had previously created.

Refining this table allowed us to better ascertain where the opportunities lie for improving the user experience of book-tracking.

Interestingly, we found that StoryGraph addresses many of the recurring complaints users made about Goodreads by including desired features, such as half-star ratings and a “Did not finish” collection. This led us to explore StoryGraph and its reviews further, and we noticed that a number of these desirable elements are underdeveloped. We suspect this is a result of attempting to implement too many features too quickly, without taking the necessary time to test and refine each.

This was an important wake-up call for us.

We realized that if we wanted to truly improve the user experience of book-tracking in the span of five months, we needed to narrow our scope and focus our efforts.

Specifying the problem

From our research, we learned that managing collections and evaluating books are two of the core functionalities of book-tracking. Managing collections is often overly complex, limited, and unclear. The process of evaluating books is either considered rigid and inflexible or difficult to navigate and confusing, depending on the platform used.

With this in mind, we narrowed our scope and specified our problem statement to ask:

How might we make managing collections and evaluating books more intuitive, flexible, findable, and easy to navigate for both new and existing book-tracking users between the ages of 18 and 35?

These terms (intuitive, flexible, findable, and easy to navigate) came from our understanding of the users' needs. We later validated them against Peter Morville's UX Honeycomb, whose facets overlap with them.

Ideating

During our user research, we maintained an “idea parking lot” to quickly document ideas that sparked either within us or within the users we connected with. We then revisited this document afterwards as we shifted further into the ideation phase of our design process.

My design partner and I then used these initial ideas to stimulate independent brainstorming sessions (see left) on how to improve the collection management system and the book evaluation process, which evolved into a collaborative brainstorming session where we also began clustering the ideas by theme (see right).

We then used these clusters to guide a selection of ideas that we were most curious to quickly test with users.

Prototyping and testing

Our first instinct was to sketch out various ideas and obtain feedback from users to quickly figure out which ideas might be worth pursuing and developing further. I did this by hand-sketching while my design partner used Inkscape.

We ultimately generated a total of five iterations of our solution by following a build-measure-learn cycle.

Our user-testing plan

Apart from the first round of testing, which was informal and discussion-based, our typical user-testing plan for the remaining four iterations involved an introduction to the project, an archetype survey, thinking aloud tests, A/B tests, and an open discussion.

Having users complete an archetype survey allowed us to classify them based on the five archetypes we had outlined, which provided us with better context into their feedback and how our prototypes were viewed by different types of readers.

The thinking aloud tests allowed us to present users with various tasks, which they then attempted to carry out while vocalizing their thinking process. We were also able to observe their interactions with the prototypes to better understand what worked well and what didn’t.

One of the benefits of working with another designer is that we were both naturally prone to envisioning the same initial idea in different ways. We took advantage of this by consistently testing multiple versions of different features through A/B testing and observing and discussing how users interacted with each version.

Archetype survey template

Let’s walk through a brief example

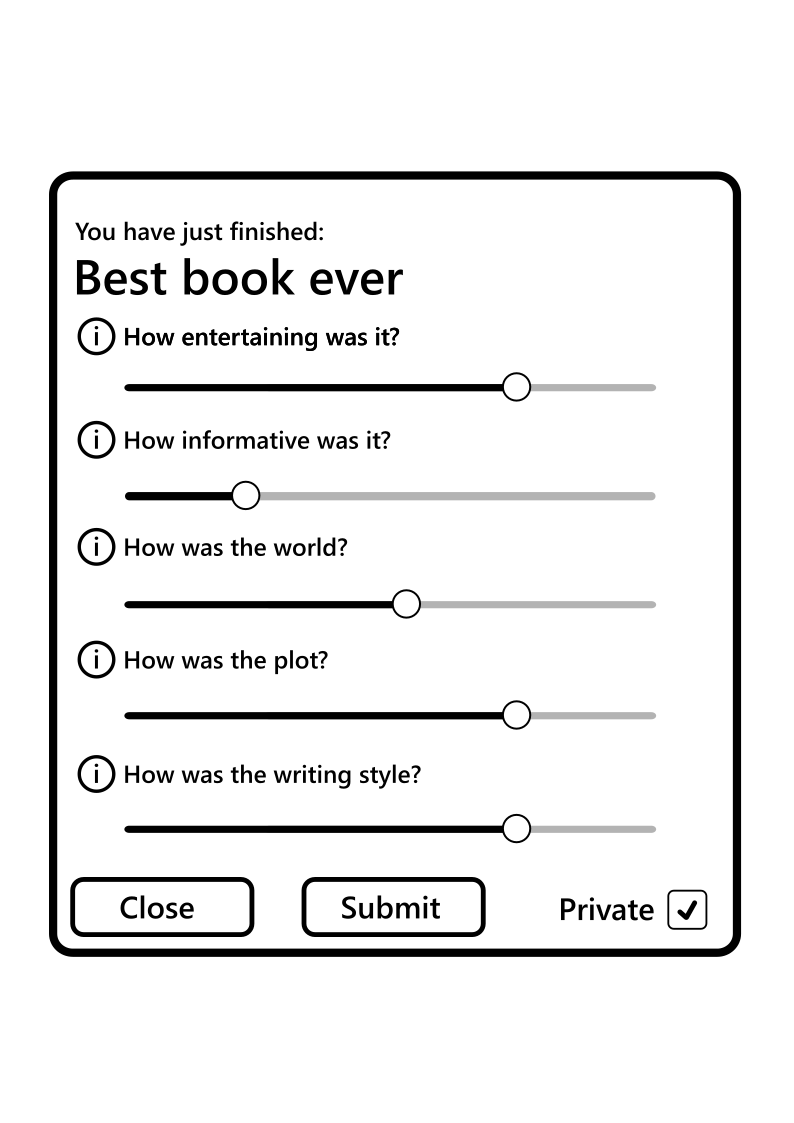

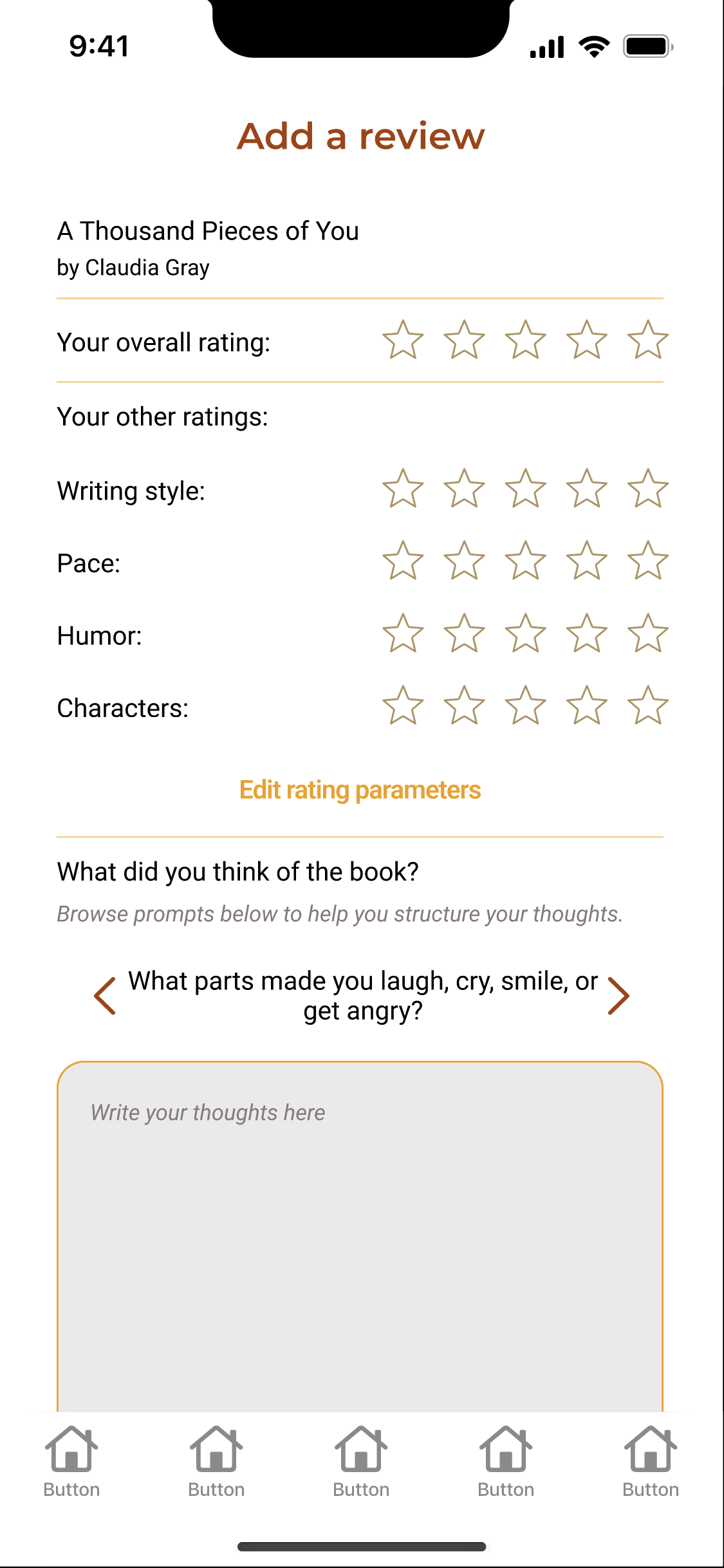

Here I’ll showcase a short example of an A/B test we performed with users on a feature in the second iteration, and how we consequently modified it in the third iteration.

One of our ideas to improve the book evaluation experience was to provide the user with optional question prompts to aid their review-writing process. Much like in popular dating apps (e.g., Hinge), these prompts could spark a train of thought among readers so that they could better articulate their thoughts or opinions of a book.

One idea involved a separate “Choose a prompt” page, which readers would navigate to using a secondary button on the evaluation screen.

The second idea involved a carousel of prompts directly situated within the evaluation screen, which users could then interact with by tapping the arrows on either side of the prompt.

The yellow shading in these examples is used merely to highlight the discussed features.

Ultimately, the users found value in both versions. The first idea gave users a comprehensive list that was easier to dig through. The second gave users a quick and convenient way to access the prompts without needing to navigate to a new page.

Together with the users, we devised a solution to combine the versions, resulting in the updated feature shown below. In our third iteration, users are presented with the carousel on the evaluation page but can also access the comprehensive list of prompts when tapping on the prompt displayed in the carousel. Users can then select a prompt from the comprehensive list, which will return them to the evaluation page with the selected prompt now displayed in the carousel.

More wireframes from iteration 3

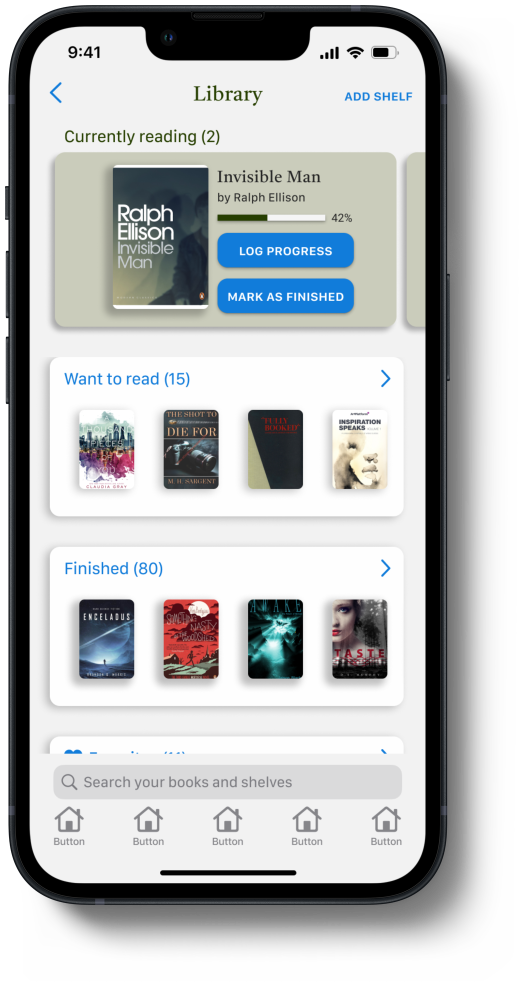

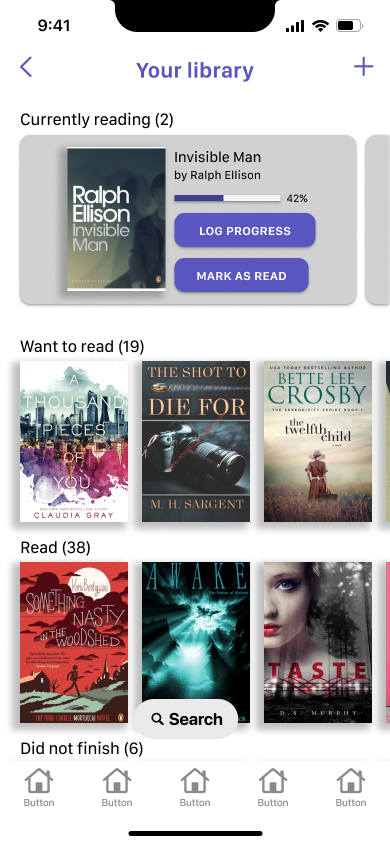

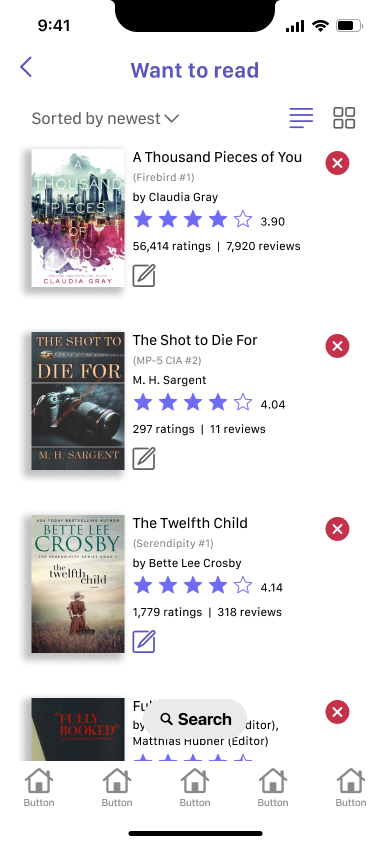

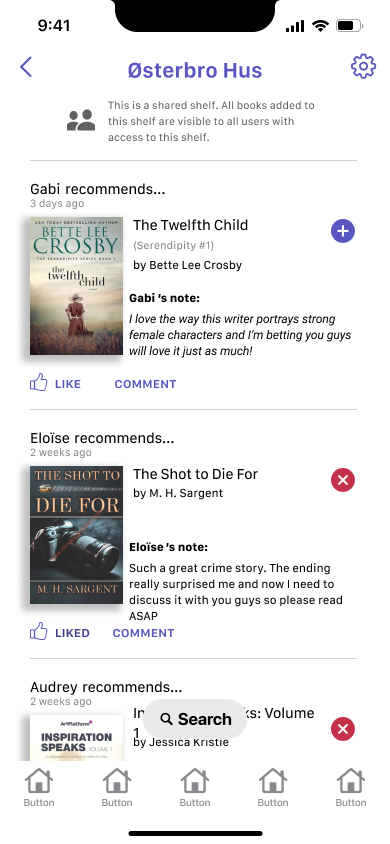

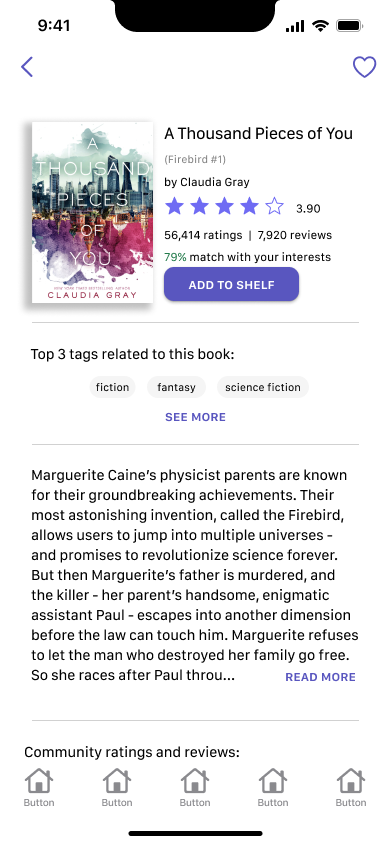

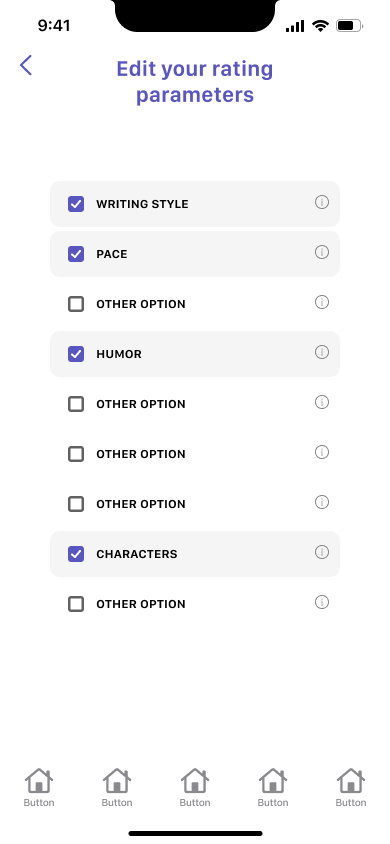

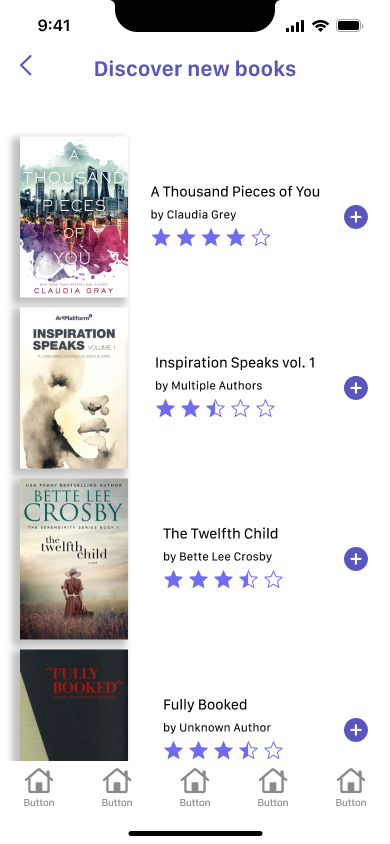

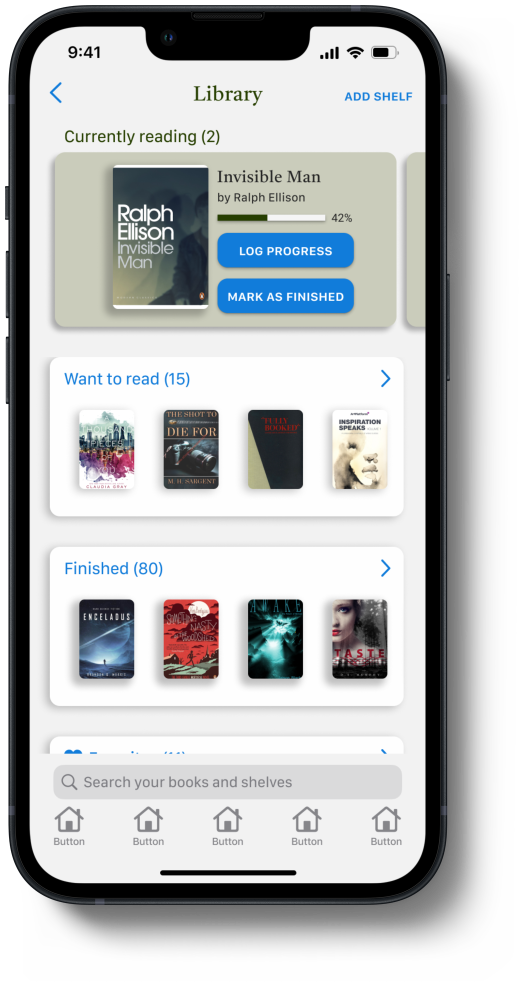

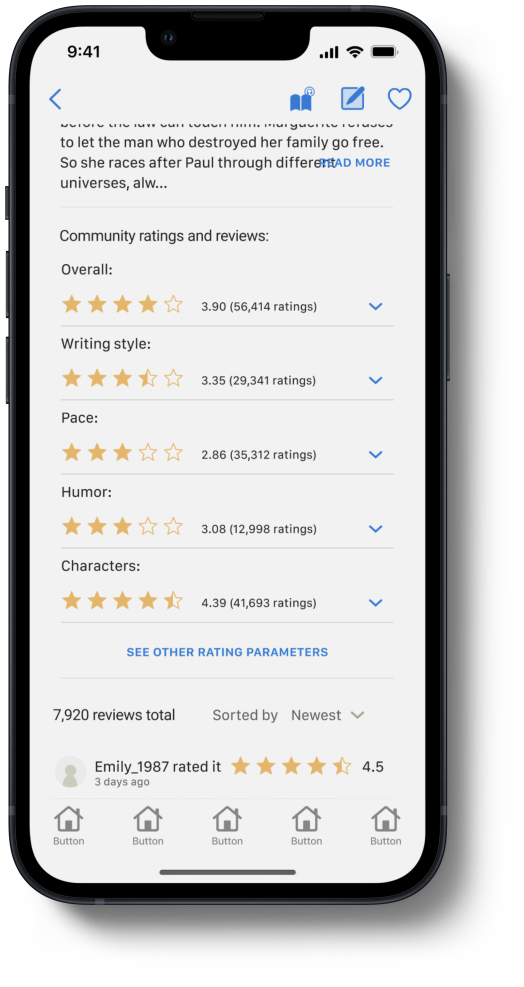

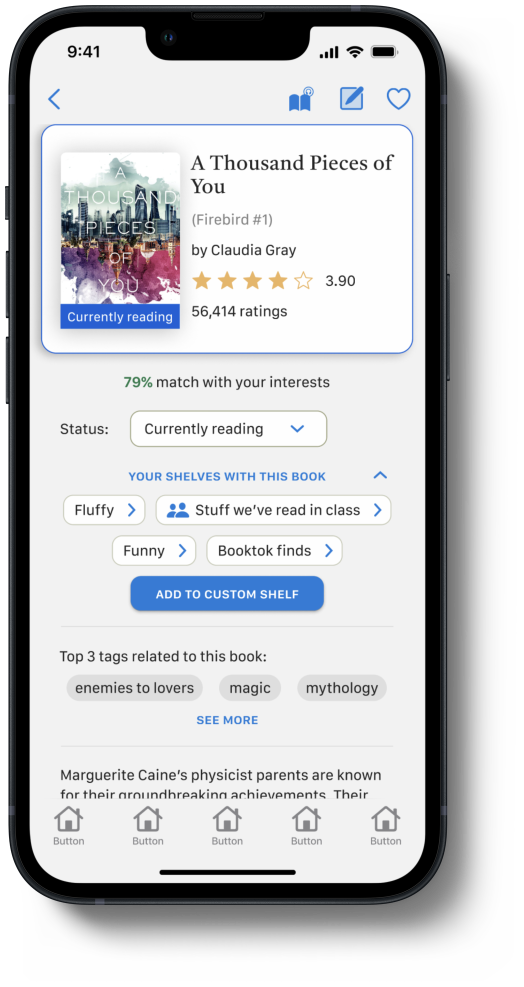

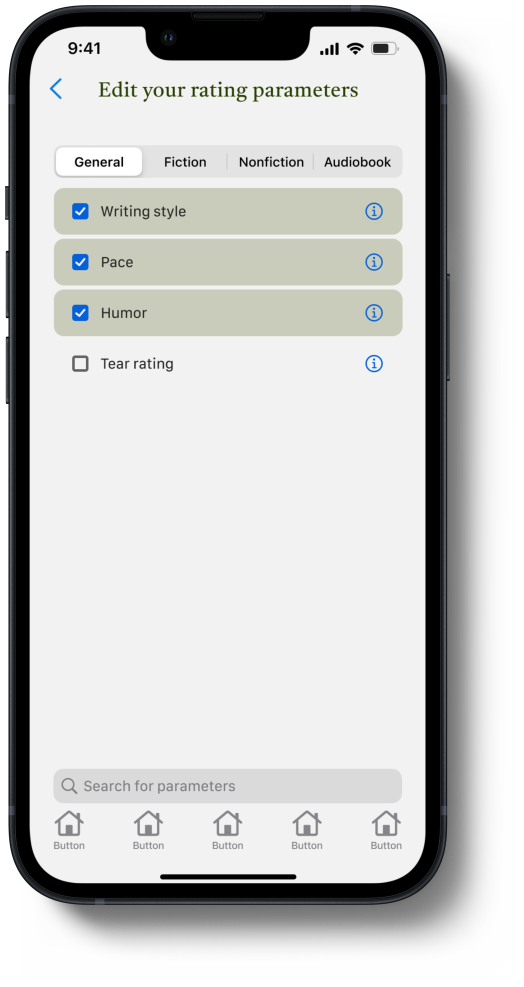

Final prototype

The following screens showcase the fifth and final iteration of our solution, which focuses specifically on the collection management and book evaluation processes in book-tracking, and is therefore not a completely designed book-tracking app. Descriptions of the key elements in each screen of our solution are provided in the slideshow below the gallery, but can only be read fully in desktop view.

Final testing

We tested our final interactive Figma prototype with five users by doing the following:

Thinking Aloud tests with guided tasks,

Three surveys each (NASA TLX, SUS, and our own custom survey, which refers back to the major elements of our specified problem statement).

We also assessed the solution’s usability using Jakob Nielsen’s 10 Usability Heuristics for User Interface Design.

Thinking aloud

The following is an example of a Thinking Aloud scenario and its associated tasks given to users during testing.

Scenario: You’ve just finished reading a fictional horror book and felt that it was good overall but lacked in some areas. You want to leave a rating and write a review for your own personal record, but you sometimes struggle to summarize your opinions of books.

Task 1: Access the book’s Evaluation page.

Task 2: Rate the book 3.5 stars overall.

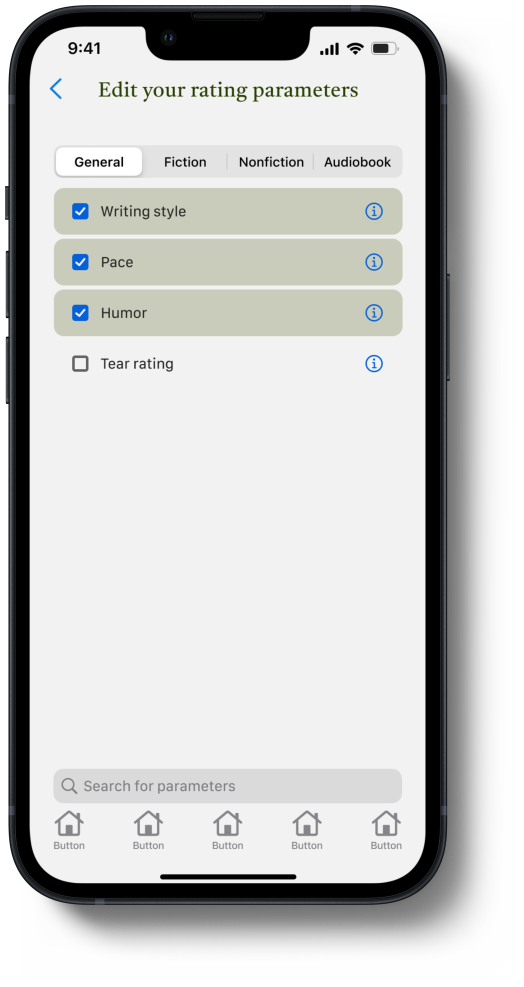

Task 3: Edit your rating parameters to reflect the aspects most relevant to the book you read (de-select the Humor parameter and select Plot twists).

Task 4: Find and select the review prompt “How did you enjoy the author’s writing style?”

Task 5: Browse other review prompts, just to see what else is there.

Task 6: Edit the privacy settings so that your ratings and review are only visible to you.

The feedback we received during the Thinking Aloud tests was generally positive. Users felt the prototype was easy to navigate and a clear improvement over Goodreads.

The following expandable lists depict the general feedback comments from the user testing. We acknowledge a number of further improvements we would implement in a future iteration if we had the time to do so.

Main Library View:

Users were happy with the aesthetics and functionality.

"Read" shelf was renamed to "Finished," which resolved an issue with ambiguous wording.

Some users had issues with closing the feedback modal after marking a book as "finished" and the placement of the "Currently reading" shelf.

Shelf View (Using "Want to read" shelf as an example):

Users liked the book recommendation feature.

Suggestions to add a way to go back to the previous recommendation and change colors for the recommendation display.

Privacy settings were easy to find and adjust.

Removing a book from a shelf by swiping wasn't explicitly indicated, causing some users to struggle. Consider adding an option for multi-select after long-pressing.

Filtering was confusing to users due to the location of the filter icon. Suggested grouping related elements (sort, search, and filter).

Shared Shelves:

Users were enthusiastic about the idea but some struggled to locate shared shelves among others in the Library page.

Adding books to “Want to Read” shelf from “Shared shelf” was smooth.

Suggested that clicking the "Info" icon should provide guidance on adding users.

Book Info Page (Collection Management):

Users had minor issues with the final design.

Some users did not realize the purpose of the dropdown to change the reading status.

Custom shelves were considered simple to use, but removing a book was confusing; suggested changing the button label to "Edit custom shelves."

Changing the icon of quick-notes and rephrasing tasks improved user experience.

Users successfully changed the book format to an audiobook, but some had reservations about the icon's clarity.

Star Ratings:

Users understood the star rating system (tap once for half-filled, twice for fully-filled) without issues.

Some users suggested the ability to drag to fill the stars for added convenience, which was mentioned in previous iterations but couldn't be implemented due to Figma limitations.

Rating Parameters:

Users generally liked the idea of secondary rating parameters.

One user had trouble understanding the concept, assuming they had to input secondary ratings and the overall rating would be calculated automatically.

Suggested adding an "Info" icon and modal to explain the concept of rating parameters.

The menu for selecting rating parameters was clear and understandable to all.

Wording improvement suggested for the search bar to make it clear that it applies to all parameters.

Review Prompts:

Positive feedback on the proposal of review prompts to guide users while writing.

Users had no problems understanding how to use them or what they do.

All users performed tasks related to review prompts without issues.

Specifying the privacy of reviews was clear and straightforward.

Viewing Ratings and Reviews on Book Info Page:

No issues reported when viewing ratings and reviews on the Book Info page.

Users could easily check specific rating parameters by tapping the overall rating or scrolling manually.

Viewing reviews and changing their sorting order was clear and intuitive for all users.

Marking the book as "finished" and navigating to the Evaluation page was effortless for everyone.

Surveys

Along with issuing the NASA TLX and SUS surveys to users, we also created our own custom survey to try to assess how well our solution meets the four objectives listed in our specified problem statement.

The results of the NASA TLX and SUS surveys were favorable, which may give some indication of the usability of the system overall. However, although they provided us with quantitative data to complement the qualitative feedback we obtained, the surveys were not as useful as we had hoped. The main issues were the lack of baseline data to compare the results against and the very limited number of responses.
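For reference, SUS has a standard scoring procedure, which the following minimal sketch illustrates: each odd-numbered (positively worded) item contributes its response minus 1, each even-numbered (negatively worded) item contributes 5 minus its response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The response values below are hypothetical, not our participants' data, and the function name `sus_score` is only illustrative.

```python
from statistics import mean


def sus_score(responses):
    """Return the SUS score (0-100) for one participant's ten 1-5 responses."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses, each between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd items are positively worded
        else:
            total += 5 - response  # even items are negatively worded
    return total * 2.5


# Hypothetical responses from three participants (not actual study data).
participants = [
    [4, 2, 5, 1, 4, 2, 5, 1, 4, 2],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
]

scores = [sus_score(p) for p in participants]
print(scores, mean(scores))
```

With only a handful of participants, the mean of such scores is best read as a rough indication rather than a benchmark, which matches the limitation described above.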

As for the custom survey, it was also not as informative as we had hoped. The main reason is the lack of any baselines or standard ways of interpreting the scores. The best use of the data from our custom survey was to compare the individual scales with each other. Doing this suggests that users believe the aspect we succeeded in the most is “flexible”, meaning the users felt satisfied with the freedom to express their views and preferences.

Usability heuristics

By analyzing our solution against Jakob Nielsen’s 10 Usability Heuristics for User Interface Design, we were able to critically assess what works well and what doesn’t. When evaluating how well the solution meets the four objectives in our specified problem statement, the heuristics allowed us to isolate the issues in our design more precisely, giving us a clearer idea of what should change in a future iteration. In retrospect, we wish we had carried out this assessment early in our design process rather than at the very end.

In summary, we found that our solution performs reasonably well (although not without fault) in the following areas:

Visibility of system status,

Consistency and standards,

Recognition rather than recall,

Flexibility and efficiency of use,

Help & documentation.

Conversely, we recognized that most improvements could be made in the following categories:

User control and freedom (e.g., with more consistent cancel and undo buttons),

Error prevention (e.g., with a plan to prevent accidentally marking a book as “finished”),

Aesthetic and minimalist design (e.g., with an overall design update).

Closing thoughts

Our solution is not a full application and has its limitations. Still, testing it and closely considering how it fulfills the four objectives in our problem statement (intuitive, flexible, findable, and easy to navigate) showed that it provides a compelling example of how we might foster a better user experience in book-tracking. We also see various ways in which the solution would benefit from further development, which suggests that future iterations could produce even more promising results and have an impact on book-tracking as a whole.

The following is a summary of my biggest personal takeaways from this project:

User research takes time (obtaining, processing, and analyzing data) and should not be rushed. It’s worth spending the time to understand users. It should also continue all throughout the design process.

Vague problems lead to vague solutions. A good problem statement is specific without being overly restricting.

Fail fast. Not all ideas are good ideas. It’s better to find this out early on rather than after spending a lot of time developing something.

Be intentional about your testing methods and don’t omit quantitative testing. It gives you clearer indications of which aspects can be improved and how.

MVP vs. features. Approaching the problem from a bigger-picture perspective, rather than reworking individual features in new ways, would have made the solution stronger as a whole.